Sparse Matrices#

%pylab inline

import scipy as sp

import scipy.sparse as sparse

import scipy.sparse.linalg as sla

%pylab is deprecated, use %matplotlib inline and import the required libraries.

Populating the interactive namespace from numpy and matplotlib

A \(m\times n\) matrix is sparse if it has few non-zero entries in comparison to all \(mn\) total entries. Sparsity is a qualitative notion - it might mean we have \(O(\min\{m,n\})\) non-zero entries (for example, a diagonal matrix), it might also mean we have \(O(mn)\) entries, but the constant is small (for example, \(mn/100\)). A dense matrix is not sparse, meaning that most (or all) of the entries are non-zero.

Recall the formula for matrix-vector multiplication:

When we multiply a vector (or matrix) by a sparse matrix, most of the coefficients are zero, and so we might expect that we can apply the matrix more quickly than we might apply a dense matrix. We can re-write the matrix-vector multiplication formula as

Where \(nz(i)\) denotes the column indices \(j\) for which \(A_{i,j}\) is non-zero. We see the complexity of multiplying a sparse matrix is \(O(nnz(A))\), where \(nnz(A)\) is the number of non-zeros (note that when \(A\) is dense, \(nnz(A) = mn\)).

Sparse Matrix Formats#

There are a variety of ways sparse matrices are stored in practice. The utility of each format depends on whether there is any structure in the non-zeros, or what the matrix will be used for.

Scipy provides several standard types of sparse matrices in sicpy.sparse. See the documentation.

COOrdinate Format#

Perhaps the easiest to describe is the COO (COOrdinate format), which just stores three lists i,j,data, where i[k] and j[k] are the row and column indices for a non-zero entry with value data[k].

Scipy documentation is here

row = [0,2,1]

col = [0,2,1]

val = [1,1,1]

S = sparse.coo_matrix((val, (row,col)), shape=(3,3))

S.toarray()

array([[1, 0, 0],

[0, 1, 0],

[0, 0, 1]])

row = [0,1,2,2]

col = [0,1,2,0]

val = [1,1,1,2]

S = sparse.coo_matrix((val, (row,col)), shape=(3,3))

S.toarray()

array([[1, 0, 0],

[0, 1, 0],

[2, 0, 1]])

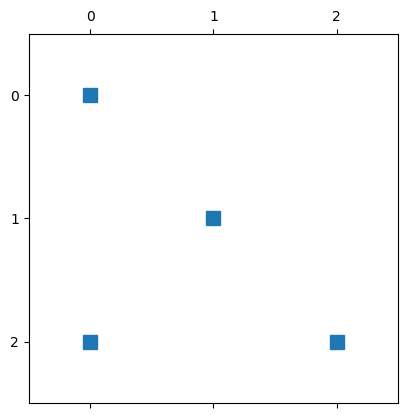

You can visualize the sparsity pattern using PyPlot’s spy function (this is particularly useful for large sparse matrices)

plt.spy(S)

plt.show()

Compressed Sparse Row/Column Formats#

One of the disadvantages of COO Matrices are that entries need not be ordered in any way, which can lead to inefficiencies in memory access in matrix-vector or matrix-matrix multiplication.

Commonly used formats which keeps entries in a sensible order (without additional structure assumed) are Compressed Sparse Row (CSR) and Compressed Sparse Column (CSC) matrices. You might think of these as the sparse equivalents of row-major and column-major dense matrices.

We’ll describe CSC matrices - the transpose is a CSR matrix.

If S is a CSC matrix with m rows, n columns, and nnz non-zeros, we specify S with three lists: ptr (length n+1), row (length nnz) and val (length nnz). The non-zero row indices for column j are stored in row[ptr[j]:ptr[j+1]], and the non-zero values for those rows are stored in val[ptr[j]:ptr[j+1]]. If you’re familiar with pointers in a language like C, ptr is an array of pointer offsets.

Basically, the non-zero entries for each column are stored in contiguous blocks of memory. This can make it much faster to apply CSC matrices.

Scipy documentation is here

ptr = [0,2,4,5]

row = [0,2,0,1,2]

val = [1,2,3,1,1]

S = sparse.csc_matrix((val, row, ptr), shape=(3,3))

S.toarray()

array([[1, 3, 0],

[0, 1, 0],

[2, 0, 1]])

# the pointer list gives you slices to get the data for each column

j = 1

row[ptr[j]:ptr[j+1]], val[ptr[j]:ptr[j+1]]

([0, 1], [3, 1])

Other Sparse Matrix Types#

Other matrix types in scipy.sparse include:

dia_matrix, which is good for diagonal/banded matriceslil_matrix, or a (row-based) list-of-lists matrix, which is good for mutating row operationsbsr_matrix, or block sparse row, which is good for sparse matrices with dense blocksdok_matrix, or dictionary of keys, which is good for when you want to access and change individual entries quickly.

data = np.array([[1,2,3],[4,5,6]])

S = sparse.dia_matrix(

(data, [0,1]),

shape=(3,3))

S.toarray()

array([[1, 5, 0],

[0, 2, 6],

[0, 0, 3]])

Changing formats#

If you have a dense matrix, and want to convert it to a sparse matrix format, you can typicially just pass it to a sparse matrix constructor

A = np.eye(5) # identity

As = sparse.dia_matrix(A)

As

<5x5 sparse matrix of type '<class 'numpy.float64'>'

with 5 stored elements (1 diagonals) in DIAgonal format>

Sparse matrix formats have a todense method which converts to a dense matrix.

As.todense()

matrix([[1., 0., 0., 0., 0.],

[0., 1., 0., 0., 0.],

[0., 0., 1., 0., 0.],

[0., 0., 0., 1., 0.],

[0., 0., 0., 0., 1.]])

You can also us the toarray method to get a numpy array without the matrix wrapper

As.toarray()

array([[1., 0., 0., 0., 0.],

[0., 1., 0., 0., 0.],

[0., 0., 1., 0., 0.],

[0., 0., 0., 1., 0.],

[0., 0., 0., 0., 1.]])

To convert between sparse matrix formats, you can use tocsc, tocoo, etc.

As2 = As.tocsr()

As2

<5x5 sparse matrix of type '<class 'numpy.float64'>'

with 5 stored elements in Compressed Sparse Row format>

Exercise#

In this exercise, you will implement a basic Dictionary of Keys matrix.

The class should wrap a python dictionary, which takes tuples of ints as keys, and floats as values. It should also store the shape of the matrix.

for example, the data for a \(3\times 3\) identity matrix is

data = {(0,0): 1.0, (1,1): 1.0, (2,2): 1.0}

Implement the following methods:

__getitem__for accessing an element__setitem__for setting a value (delete the key if the value is zero)nnz()to return the total number of non-zerosmatvec(x)to apply the matrix to a vector, using equation ()

# your code here

Show code cell content

import numpy as np

class DOK_matrix():

def __init__(self,data=dict(), shape=(0,0)):

self.shape=shape

self.nzs = data

def __getitem__(self, arg):

return self.nzs.get(arg, 0.0)

def __setitem__(self, arg, val):

if val == 0:

self.nzs.pop(arg, val)

self.nzs[arg] = val

def nnz(self):

"""

number of non-zeros

"""

return len(self.nzs)

def matvec(self, x):

y = np.zeros(self.shape[0])

# loop over nonzero-indices

for inds, v in self.nzs.items():

i, j = inds

y[i] += v * x[j]

return y

A = DOK_matrix(shape=(5,5))

print(A[1,1])

A[1,1] = 1.0

x = np.array([1,2,3,4,5])

A.matvec(x)

0.0

array([0., 2., 0., 0., 0.])

Saving and Loading Sparse Matrices#

Dense matrices can be easily stored and read from comma-separated value formats using e.g. np.genfromtxt and np.savetxt. Because sparse matrices can be stored more efficiently than dense matrices, they have special storage formats.

One source of sparse matrices which is used extensively for testing is the University of Florida Sparse Matrix Collection (Link). As an example, we’ll just read the 1138_bus.mtx file, which is matrix-market format, and you can download from that link. This is a plain text file, with a header (every line begins with %), and the first row contains three integers: the number of rows, number of columns, and number of nonzeros in the matrix. For 1138_bus.mtx, this looks like:

%%MatrixMarket matrix coordinate real symmetric

%-------------------------------------------------------------------------------

% UF Sparse Matrix Collection, Tim Davis

% http://www.cise.ufl.edu/research/sparse/matrices/HB/1138_bus

% name: HB/1138_bus

% [S ADMITTANCE MATRIX 1138 BUS POWER SYSTEM, D.J.TYLAVSKY, JULY 1985.]

% id: 1

% date: 1985

% author: D. Tylavsky

% ed: I. Duff, R. Grimes, J. Lewis

% fields: title A name id date author ed kind

% kind: power network problem

%-------------------------------------------------------------------------------

1138 1138 2596

So the matrix is 1138 x 1138 with 2596 nonzeros.

Every subsequent row is in the form row, column, data - one nonzero in COO format.

Let’s go ahead and load this matrix:

data = np.genfromtxt('1138_bus.mtx', comments='%') # skip any rows that begin with `%`

data.shape

(2597, 3)

the first non-comment row contains the size of the matrix, so we can handle it separately.

m, n = int(data[0,0]), int(data[0,1])

data = data[1:]

matrix market format uses the Fortran convention of beginning indexes at 1, so we need to subtract 1 from indices to get the correct Python indices

rows = data[:,0] - 1

cols = data[:,1] - 1

vals = data[:,2]

A = sparse.coo_matrix((vals, (rows, cols)), shape=(m,n))

A

<1138x1138 sparse matrix of type '<class 'numpy.float64'>'

with 2596 stored elements in COOrdinate format>

Let’s look at the difference between using the sparse matrix and a dense matrix for matrix-vector multiplications:

Acsr = A.tocsr()

Adense = A.todense()

import time

x = np.random.randn(n)

y = np.empty_like(x)

t0 = time.time()

y = Acsr @ x

t1 = time.time()

print("time for CSR multiply: {} sec.".format(t1 - t0))

tcsr = t1 - t0

t0 = time.time()

y = Adense @ x

t1 = time.time()

print("time for dense multiply: {} sec.".format(t1 - t0))

tdense = t1 - t0

print("CSR is {}x faster".format(tdense / tcsr))

time for CSR multiply: 0.0003948211669921875 sec.

time for dense multiply: 0.002431154251098633 sec.

CSR is 6.157608695652174x faster

Depending on what is happening on my system, using the sparse matrix is several times faster than using a dense matrix.

Depending on what operations you are performing, different matrices have different strengths/weaknesses. CSR is generally good for matrix-vector multiplication. For matrix-matrix multiplications, matrices will be converted to CSR or CSC format first, which dominates the time.

fmts = (sparse.csc_matrix, sparse.csr_matrix, sparse.lil_matrix, sparse.coo_matrix, sparse.dok_matrix)

names = ('CSC', 'CSR', 'LIL', 'COO', 'DOK')

niter_matvec = 100 # number of iterations of matvec

niter_matmat = 20 # number of iterations of matmat

x = np.random.randn(n)

y = np.empty_like(x)

# first let's just do a little warmup

for fmt, name in zip(fmts, names):

Afmt = fmt(A)

fmt_ts = []

for _ in range(niter_matvec):

t0 = time.monotonic()

y = Afmt @ x

t1 = time.monotonic()

fmt_ts.append(t1 - t0)

print("matvec:")

for fmt, name in zip(fmts, names):

Afmt = fmt(A)

fmt_ts = []

for _ in range(niter_matvec):

t0 = time.monotonic()

y = Afmt @ x

t1 = time.monotonic()

fmt_ts.append(t1 - t0)

print("time for {} matvec: {:.3e} sec.".format(name, np.min(fmt_ts)))

print("\nmatmat:")

for fmt, name in zip(fmts, names):

Afmt = fmt(A)

fmt_ts = []

for _ in range(niter_matmat):

t0 = time.monotonic()

B = Afmt @ Afmt

t1 = time.monotonic()

fmt_ts.append(t1 - t0)

print("time for {} matmat: {:.3e} sec. \t{}".format(name, np.min(fmt_ts), type(B)))

matvec:

time for CSC matvec: 6.800e-06 sec.

time for CSR matvec: 5.600e-06 sec.

time for LIL matvec: 1.082e-04 sec.

time for COO matvec: 5.900e-06 sec.

time for DOK matvec: 7.501e-04 sec.

matmat:

time for CSC matmat: 1.466e-04 sec. <class 'scipy.sparse._csc.csc_matrix'>

time for CSR matmat: 1.546e-04 sec. <class 'scipy.sparse._csr.csr_matrix'>

time for LIL matmat: 3.656e-04 sec. <class 'scipy.sparse._csr.csr_matrix'>

time for COO matmat: 3.427e-04 sec. <class 'scipy.sparse._csr.csr_matrix'>

time for DOK matmat: 1.127e-03 sec. <class 'scipy.sparse._csr.csr_matrix'>

The COO matrix performance will depend a bit on if the rows/columns are ordered in any way (or not at all). The matrix above was constructed with entries in CSC order.

np.all(np.diff(cols) >= 0) # columns are in sorted order

True

niter_matvec = 100 # number of iterations of matvec

print("\nCOO in CSC order")

A = sparse.coo_matrix((vals, (rows, cols)), shape=(m,n))

fmt_ts = []

for _ in range(niter_matvec):

t0 = time.monotonic()

y = A @ x

t1 = time.monotonic()

fmt_ts.append(t1 - t0)

fmt_ts = []

for _ in range(niter_matvec):

t0 = time.monotonic()

y = A @ x

t1 = time.monotonic()

fmt_ts.append(t1 - t0)

print("time for matvec: {:.3e} sec.".format( np.min(fmt_ts)))

print("\nCOO in CSR order")

perm = np.argsort(rows, kind='stable') # stable sort to keep columns sorted within row

A = sparse.coo_matrix((vals[perm], (rows[perm], cols[perm])), shape=(m,n))

fmt_ts = []

for _ in range(niter_matvec):

t0 = time.monotonic()

y = A @ x

t1 = time.monotonic()

fmt_ts.append(t1 - t0)

fmt_ts = []

for _ in range(niter_matvec):

t0 = time.monotonic()

y = A @ x

t1 = time.monotonic()

fmt_ts.append(t1 - t0)

print("time for matvec: {:.3e} sec.".format( np.min(fmt_ts)))

print("\nCOO in random order")

np.random.seed(0)

perm = np.random.permutation(len(vals))

A = sparse.coo_matrix((vals[perm], (rows[perm], cols[perm])), shape=(m,n))

fmt_ts = []

for _ in range(niter_matvec):

t0 = time.monotonic()

y = A @ x

t1 = time.monotonic()

fmt_ts.append(t1 - t0)

fmt_ts = []

for _ in range(niter_matvec):

t0 = time.monotonic()

y = A @ x

t1 = time.monotonic()

fmt_ts.append(t1 - t0)

print("time for matvec: {:.3e} sec.".format( np.min(fmt_ts)))

COO in CSC order

time for matvec: 7.500e-06 sec.

COO in CSR order

time for matvec: 5.900e-06 sec.

COO in random order

time for matvec: 5.600e-06 sec.